What Is Clustering? Definition, Common Questions, and Examples

Clustering is a class of machine learning algorithms that sort data into groups of similar items. Its advantage is that, as an unsupervised machine learning method, it needs no prior knowledge of the data and therefore operates purely on similarities within the data.

Clustering algorithms are very popular, with uses ranging from grouping customers or products to outlier detection in banking and spam filtering. This article begins with a definition of clustering before introducing the various methods and algorithms.

Definition of clustering: what is it?

Simply put, clustering is a machine learning method for organizing data points into groups. Similarities in the data (e.g., similar age, same gender) are used to identify groups that are as homogeneous as possible (e.g., young, male people).

Clustering works without knowing in advance which entries are similar; instead, it calculates the similarities purely from the data. Clustering is therefore a suitable method for finding groups or segments without prior knowledge and for deriving insights from them.

The goals of using clustering can be roughly divided into two categories. The first category aims to combine similar data points and thereby reduce complexity.

The other category tries to identify data points that do not belong to a larger group and thus have special characteristics. This category is called outlier detection. In both categories, the aim is to identify similar groups in order to implement appropriately tailored measures.

There are many areas in which this knowledge gain is applied. Whether it is customer clustering, product clustering, fraud detection, or spam filtering, clustering is a versatile approach in machine learning and data science.

CLUSTERING AS A METHOD IN UNSUPERVISED MACHINE LEARNING

Clustering as a method belongs to the field of machine learning. More precisely, it is classified as unsupervised machine learning. Unsupervised learning means that the data does not contain a target variable toward which the algorithm is oriented. The patterns and associations are calculated purely from the data itself.

Since clustering is a machine learning method, it also falls under artificial intelligence (AI). AI algorithms learn from data and can extract patterns or insights without predefined rules. Clustering is therefore especially interesting in data mining, where existing data is examined for unknown factors.

Examples of the use of clustering in companies

CUSTOMER GROUPING AND CUSTOMER SEGMENTS USING CLUSTERING

A very common area of application for clustering is marketing and product development. Here the algorithm is used to identify meaningful segments of similar customers.

The similarity is based on master data (e.g., age, gender), transaction data (e.g., number of purchases, shopping cart value), or other behavioral data (e.g., number of service requests, length of membership in the loyalty program).

Once customer clusters have been identified, more individualized campaigns can be rolled out. For instance, a personalized newsletter, individual offers, different service contracts, or other promotions can result from this better understanding of the customer.

CLUSTERING AS A SPAM FILTER

Another interesting everyday example of clustering is its use as a spam filter. Meta-attributes of e-mails (for example, length, character distribution, header attributes …) are used to separate spam from genuine e-mails.

PRODUCT DATA ANALYSIS: GROUPS, QUALITY, AND MORE

Another example of clustering in companies is its use in product data analysis. Product data is central master data in every company, yet it is often considered under-maintained, and it is unclear whether it has the best structure.

Clustering can help, for instance, to develop category trees and product data structures by using the similarity of product categories or individual products based on their master data. Pricing strategy can also be supported by checking which products are similar and therefore likely to belong in the same price range.

Product data quality is a further area of application in the product data environment. Clustering can help identify which products show poor data quality and, based on this, make recommendations for correction.

FRAUD DETECTION USING CLUSTERING

An example from which both consumers and companies benefit is outlier detection in the sense of fraud detection. Banks and credit card companies are active in this area and use (among other things) clustering to detect unusual transactions and mark them for review.

What methods and algorithms are there in clustering?

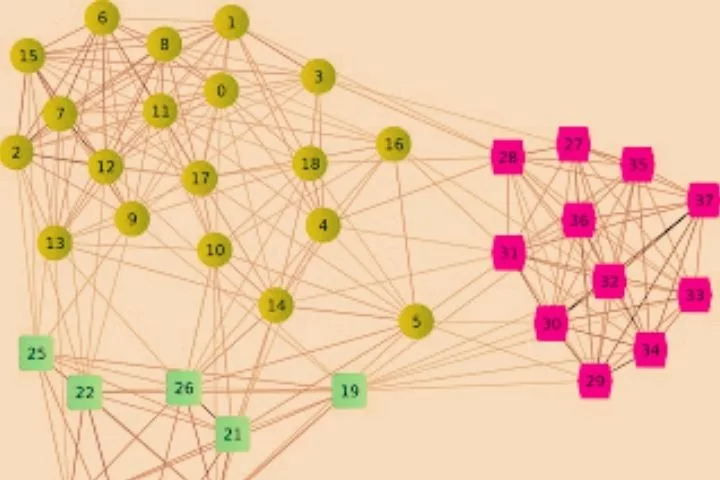

The behavior of various clustering algorithms and their properties. (Source)

In clustering, as in many other areas of machine learning, there is now a large variety of methods to choose from. Each algorithm can deliver a different result, depending on the application and, above all, the data. I deliberately leave open the point that “different” is not always better or worse.

This is because data can be grouped and separated in many ways, especially in a high-dimensional space. This is what makes clustering so complex: choosing the right algorithm for the application at hand.

In the following, we would like to introduce four of the most prominent clustering algorithms before describing other algorithms, at least briefly.

K-MEANS AS AN EXAMPLE OF PARTITIONING CLUSTERING

Partitioning clustering is one of the best-known families of clustering algorithms. k-means is the most frequently used algorithm in this category. The “k” stands for the number of clusters to be defined, while “means” stands for the mean, i.e., where the center of the cluster lies.

As the name suggests, k-means looks for a point for each of its clusters at which the variance to all surrounding points is as low as possible. This is done in an iterative process:

1) Initialization: random selection of k centers.
2) Assignment of all data points to the nearest center, measured by a distance metric.
3) Move each center to the mean of all data points assigned to it.
4) Go to 2) unless a termination criterion is met.

The distance metric measures the distance between a data point and the cluster center, and a variety of calculation methods can be used here (e.g., Euclidean distance, Manhattan distance). For instance, a person aged 18 and 160 cm tall is closer to another person aged 20 and 170 cm tall than to a person aged 60 and 190 cm tall.

Once the algorithm has terminated, i.e., has reached the termination criterion (e.g., a maximum number of iterations or only a slight change from the previous step), it outputs the nearest cluster center for each data point.
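The iterative process described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function names, the `seed` and `max_iter` parameters, the choice of squared Euclidean distance, and the six sample people are all assumptions made for the example.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points, k, max_iter=100, seed=0):
    """Cluster `points` (tuples of numbers) into k groups."""
    rng = random.Random(seed)
    # Step 1: pick k data points at random as the initial centers.
    centers = rng.sample(points, k)
    labels = []
    for _ in range(max_iter):
        # Step 2: assign every point to its nearest center.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centers[c]))
            for p in points
        ]
        # Step 3: move each center to the mean of its assigned points.
        new_centers = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centers.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                # Keep the old center if a cluster ends up empty.
                new_centers.append(centers[c])
        # Step 4: stop when the centers no longer move.
        if new_centers == centers:
            break
        centers = new_centers
    return centers, labels


# (age, height in cm) for six hypothetical people
people = [(18, 160), (20, 170), (19, 165), (60, 190), (62, 185), (58, 188)]
centers, labels = k_means(people, k=2)
print(centers)
print(labels)
```

On this small data set the three younger and the three older people end up in separate clusters. Which cluster gets label 0 and which gets label 1 depends on the random initialization.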

Common questions about clustering

WHAT IS A DISTANCE METRIC?

The distance metric defines how the “similarity” between two points is computed. The simplest case, in one-dimensional space, is the difference between two numerical values (e.g., age): the plain difference, a difference raised to a power (e.g., squared), or a logarithmic difference are possible approaches for a distance metric.

It becomes more interesting when one talks about multidimensional distance metrics. There is a variety of established distance metrics, such as the Euclidean distance, the Manhattan distance, or the cosine distance. As usual, each metric has advantages and disadvantages.

The distance metric plays a central role in any distance-based machine learning algorithm because it defines how sensitively the algorithm reacts to deviations and how it interprets them.
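To make the three metrics named above concrete, here is a small sketch in plain Python. The function names are chosen for this example, and the sample points reuse the (age, height) people from the k-means section.

```python
import math


def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of the absolute differences along each dimension."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 minus the cosine of the angle between a and b (ignores length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# (age, height in cm)
p, q, r = (18, 160), (20, 170), (60, 190)
print(euclidean(p, q), euclidean(p, r))
print(manhattan(p, q), manhattan(p, r))
print(cosine_distance(p, q), cosine_distance(p, r))
```

Euclidean and Manhattan distance both rank `q` as closer to `p` than `r`. Cosine distance compares only the direction of the vectors, so it can behave quite differently on the same data.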

HOW DO YOU DETERMINE THE OPTIMAL NUMBER OF CLUSTERS?

It is often unclear how many clusters you want to create. Especially with partitioning methods like k-means, you have to decide beforehand which “k” you want to optimize for.

Methods such as the elbow method, the average silhouette method, or the gap statistic method are used here. In principle, it is always a matter of iteratively computing solutions for different numbers of clusters (e.g., 2 to 10) to find the number that best differentiates between the clusters.

In addition to these statistical calculations, in practice different numbers of clusters are often tried out in order to find a suitable number for the problem at hand.

Here, statistically optimal analyses are sometimes set aside, because the business question (e.g., “I need four newsletter groups”) imposes conditions, or experience (e.g., “We think in terms of three customer ratings”) has a major influence.

To bring all this information together, various software packages now offer approaches that apply many optimization methods (e.g., elbow, silhouette ...) and then output the most frequently suggested number of clusters as a recommendation. In R, for instance, this is NbClust() in the package of the same name, which compares about thirty methods for k-means.
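As an illustration of the average silhouette method, the following sketch scores a given cluster assignment in plain Python. The function names and sample data are assumptions for this example. In practice the labels would come from running a clustering algorithm once per candidate k; here they are written by hand to keep the example short.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def average_silhouette(points, labels):
    """Mean silhouette value over all points (needs at least two clusters)."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # common convention for single-point clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(euclidean(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster
        b = min(
            sum(euclidean(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
labels_k2 = [0, 0, 0, 1, 1, 1]  # the two visible groups
labels_k3 = [0, 0, 1, 2, 2, 2]  # one group split artificially
print(average_silhouette(points, labels_k2))
print(average_silhouette(points, labels_k3))
```

The assignment with the higher average silhouette separates the clusters better, so in this example k = 2 would be preferred over k = 3.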

WHAT ROLE DOES DATA QUALITY PLAY IN CLUSTERING?

As in any data-driven approach, data quality is highly relevant. Since clustering works directly on the data and uses it as the basis for the groupings, poor data quality naturally has serious consequences.

In addition to generally poor data quality, there are isolated cases of outliers. There are two factors to consider here. On the one hand, clustering is well suited to detecting outliers, which may be the direct application.

On the other hand, if this is not the goal, the algorithm is negatively influenced by outliers. In particular, algorithms that rely directly on distance metrics, such as k-means, are vulnerable to outliers. It is therefore important to examine the data quality critically and to correct it if necessary.

HOW DO I DEAL WITH REDUNDANCY (FEATURES WITH HIGH CORRELATION) IN CLUSTERING?

Redundant features, i.e., variables that are very similar or even completely identical, strongly influence clustering. In the simplest case, with unweighted attributes, a feature (e.g., weight) ends up being weighted twice (e.g., once in grams and once in kilograms).

There are several ideas for dealing with this redundancy or correlation of features. The first step is to become aware of a correlation, i.e., to identify correlations using a correlation matrix or similar analyses. There are also distance metrics for clustering that take correlations between features into account, namely the Mahalanobis distance.

Beyond the insight that a correlation exists, you have to decide whether and, if so, how to correct it. In many cases, one would like to exclude redundant features or, even better, to weight them down. The latter has the advantage that minimal interaction effects are not removed, which would be the case with a complete exclusion.

In general, it should be said that high correlations can place a heavy weight on a particular feature, which is undesirable. It is therefore important to familiarize yourself thoroughly with the available data beforehand in order to identify redundancies.
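A first check for redundant features can be sketched with the Pearson correlation coefficient. The snippet below is a minimal plain-Python illustration: the function names, the 0.95 threshold, and the sample columns are assumptions for this example and not a recommendation for any particular data set.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length columns."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def redundant_pairs(features, threshold=0.95):
    """Return (name, name, r) for feature pairs with |r| >= threshold."""
    names = list(features)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            r = pearson(features[first], features[second])
            if abs(r) >= threshold:
                pairs.append((first, second, r))
    return pairs


# Hypothetical product data: the same weight stored in two units.
features = {
    "weight_g": [500, 1200, 800, 2500, 300],
    "weight_kg": [0.5, 1.2, 0.8, 2.5, 0.3],
    "price": [12.0, 9.5, 30.0, 14.0, 22.0],
}
for first, second, r in redundant_pairs(features):
    print(first, second, round(r, 3))
```

Only the two weight columns are flagged here. Whether a flagged feature is then dropped or merely weighted down remains the judgment call described above.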

HOW DO I DEAL WITH A VERY LARGE NUMBER OF VARIABLES?

A very high number of variables usually leads to a long runtime of the algorithms. It may also lead to minimal effects being used to differentiate the clusters. A common remedy is principal component analysis (PCA), used here to reduce the number of variables. It checks which features, or combinations of features, account for a high variance within the data in the first place. In this way, the number of variables is reduced and other problems are avoided.
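The idea behind PCA can be illustrated by finding the single direction of largest variance. The sketch below does this with power iteration on the covariance matrix in plain Python. It is a simplified illustration under stated assumptions, not a full PCA: it returns only the first component, the function names, start vector, and sample data are choices made for this example, and it assumes the start vector is not orthogonal to the component being sought.

```python
import math


def covariance_matrix(rows):
    """Sample covariance matrix of `rows` (one row per observation)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def first_principal_component(rows, iterations=200):
    """Approximate the direction of largest variance by power iteration."""
    cov = covariance_matrix(rows)
    d = len(cov)
    # Arbitrary start vector (assumed not orthogonal to the result).
    v = [1.0 / (j + 1) for j in range(d)]
    for _ in range(iterations):
        # Multiply by the covariance matrix, then rescale to unit length.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Share of the total variance that lies along this direction.
    variance = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total = sum(cov[i][i] for i in range(d))
    return v, variance / total


# Two strongly related hypothetical features (y is roughly 2 * x).
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
direction, share = first_principal_component(data)
print(direction, share)
```

If one direction explains almost all of the variance, as in this example, the two original variables can be replaced by a single combined one with little loss of information.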

WHICH PREPROCESSING IS COMMON IN CLUSTERING?

The preprocessing steps depend on the clustering algorithm used. However, some general steps should be performed in advance:

- Missing data: If individual data entries are missing, you must decide how to deal with them. For instance, missing data can be handled by removing the affected entries or attributes, or by imputation.
- Curse of dimensionality: In brief, a very high number of features can have negative effects. One therefore tries to keep the number to a maximum of approx. 20-30 features.
- Data normalization: Data in its raw format can have an enormous impact on the results of the distance calculations. For instance, a difference in the “Weight” variable can have a very different impact than one in “Length in millimeters,” depending on the units used. Therefore, one normalizes the data, for instance, using the z-transformation.
- Categorical variables: Not all algorithms can process categorical features such as “gender”. Accordingly, the data must either be converted into numbers (e.g., “large/small” to “10/100”) or converted into binary variables using one-hot encoding (e.g., “black/green” to “black -> 1/0, green -> 0/1”).
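Two of the preprocessing steps listed above, the z-transformation and one-hot encoding, can be sketched with the Python standard library. The function names and sample values are assumptions for this example, and the population standard deviation is used here, which is one of several common conventions.

```python
import statistics


def z_scores(values):
    """Rescale values to mean 0 and standard deviation 1.

    Assumes the values are not all identical (standard deviation > 0).
    """
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def one_hot(values):
    """Turn a categorical column into one 0/1 column per category."""
    categories = sorted(set(values))
    return {c: [1 if v == c else 0 for v in values] for c in categories}


lengths_mm = [1600, 1700, 1650, 1900]
colors = ["black", "green", "black", "green"]
print(z_scores(lengths_mm))
print(one_hot(colors))
```

After the z-transformation, every numeric feature is on a comparable scale, so no single feature dominates the distance calculation merely because of its unit.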